
Possible Issue with PulsarPointsRenderer() #772


Ah right, okay: PerspectiveCameras() doesn't require a focal_length if you provide K, but it will still use the default focal_length argument of 1, not the focal length derived from the K camera calibration matrix. This should probably be changed. I'm still not sure why the PulsarRenderer spheres seem so much more 'solid' than those from the PyTorch3D renderer when using PerspectiveCameras. Is this some kind of rasterizer behavior?
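One way to sidestep the default focal length is to read the intrinsics out of K and pass them explicitly. The snippet below is only a sketch: the helper name `intrinsics_from_K` and the example values are made up for illustration, and it assumes K follows the usual layout with fx and fy on the diagonal and the principal point in the third column.

```python
def intrinsics_from_K(K):
    """Return ((fx, fy), (px, py)) from a calibration matrix given as nested lists.

    Hypothetical helper: assumes fx = K[0][0], fy = K[1][1] and the
    principal point (px, py) = (K[0][2], K[1][2]).
    """
    fx, fy = K[0][0], K[1][1]
    px, py = K[0][2], K[1][2]
    return (fx, fy), (px, py)


# Example 4x4 calibration matrix with made-up values.
K = [
    [500.0, 0.0, 320.0, 0.0],
    [0.0, 500.0, 240.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
]

focal_length, principal_point = intrinsics_from_K(K)
print(focal_length, principal_point)
```

The resulting values could then be passed as the `focal_length` and `principal_point` arguments of PerspectiveCameras alongside (or instead of) K, so that any code reading `focal_length` sees the calibrated value. Whether they are interpreted in NDC or screen units depends on the PyTorch3D version and camera settings, so check that before comparing renders.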

If |

@classner Any idea on this potential discrepancy?


Hi @jaymefosa! If you're on master (!! there was a bug in the latest release that prevented fully accurate conversion), the resulting picture should be the same, except for very few pixel-wise differences, in the sense that the spheres should be in exactly the same place and have exactly the same radius. If by 'solid' you mean the amount of blending with the background, then this depends entirely on the 'gamma' parameter of the pulsar renderer (i.e., how hard the blending is). This is completely independent of how PyTorch3D does its rasterization and blending and does not necessarily match. Gamma values close to 1.0 give you the softest blending; gamma values close to 1e-5 give you the hardest blending. Let me know if this answers your question! :)
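To get a feel for why gamma changes how 'solid' a sphere looks, here is a toy illustration. It is not pulsar's actual blending formula, just a generic temperature-scaled softmax over made-up "closeness" values (larger means nearer to the camera), with the function name `blend_weights` invented for this sketch.

```python
import math


def blend_weights(closeness, gamma):
    """Temperature-scaled softmax: smaller gamma concentrates weight on the nearest item."""
    # Subtract the maximum before exponentiating so tiny gamma values do not overflow.
    m = max(closeness)
    exps = [math.exp((c - m) / gamma) for c in closeness]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scene along one ray: a near sphere, a far sphere, the background.
closeness = [0.9, 0.5, 0.0]

soft = blend_weights(closeness, 1.0)   # weight is spread across all three entries
hard = blend_weights(closeness, 1e-5)  # nearly all weight goes to the nearest sphere

print("gamma=1.0 :", soft)
print("gamma=1e-5:", hard)
```

With gamma near 1.0 the background and farther sphere still contribute noticeably, which reads as a soft or translucent sphere. With gamma near 1e-5 the nearest sphere dominates, which reads as 'solid'.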


@jaymefosa do you still need help with this issue?


@nikhilaravi @classner Thanks very much!

I've been trying to get Pulsar to render the same output as PyTorch3D with perspective cameras and would very much appreciate it if someone could take a look and point out any obvious misstep.

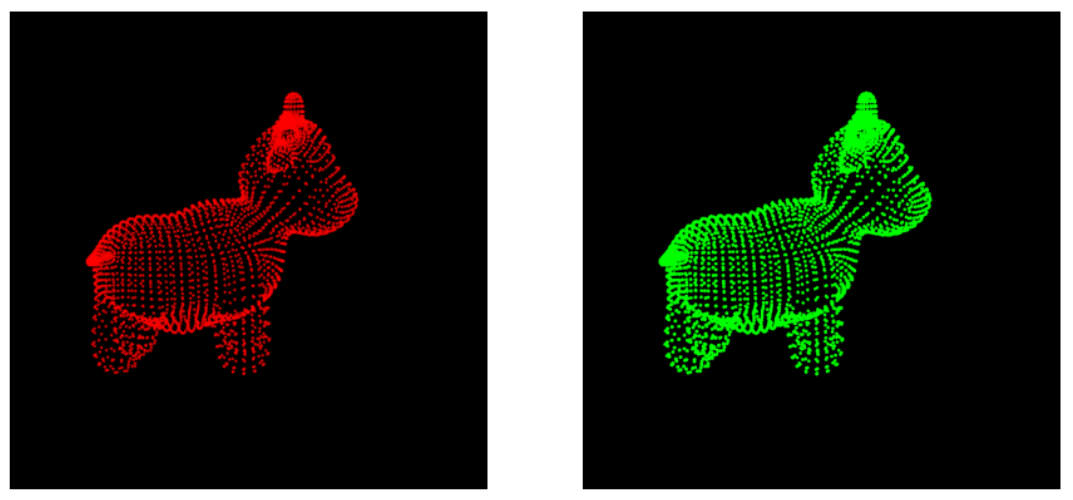

Below is the output from FOVPerspectiveCameras for both the PyTorch3D and Pulsar renderers:

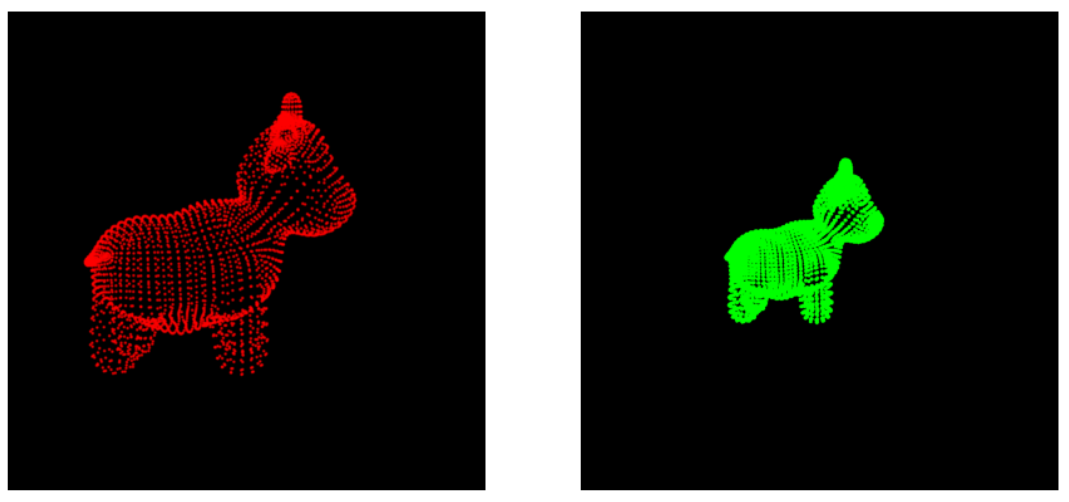

And here is the image generated when switching over to PerspectiveCameras:

The following code is self-contained and reproduces the above images.
