Cache grows when not using enableSingleSchemaCache #4247

Comments

@flovilmart any updates on this issue? Are you still looking for someone to take this up? The "good first issue" label is tempting me, if you can give me some encouragement.

Yes, that should be an easy one. The suggested resolution should be solid at the moment, and it will give you good insight into the internals of parse-server :) If you run into any issues, just let me know!

Yeah, we need to address this. I took a look at some of the code at one point. I haven't confirmed it, but it does seem to be a bad case of not cleaning up the many schema caches that accumulate over time. The interesting code is in SchemaCache.js. Over time, with random prefix IDs, I imagine you could fill up memory with no sweat. One option would be to track formerly used caches (particularly stale ones?) and flush them after a certain count. Maybe keep a cache of the cache prefixes that still exist so we can keep track of them?
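
A minimal sketch of the "cache of cache prefixes" idea, assuming the schema entries live in a plain `Map` keyed as `${prefix}:${key}`. That layout and the `PrefixRegistry` class are hypothetical and are not parse-server's actual SchemaCache or cache adapter API. The registry remembers prefixes in least-recently-used order and flushes the oldest prefix's entries once more than `maxPrefixes` are alive.

```javascript
// Hypothetical sketch: bound the number of live schema-cache prefixes.
// `store` is assumed to be a Map whose keys look like `${prefix}:${key}`;
// this is NOT the real parse-server cache adapter API.
class PrefixRegistry {
  constructor(store, maxPrefixes = 100) {
    this.store = store;
    this.maxPrefixes = maxPrefixes;
    // Map preserves insertion order, so the first entry is the least
    // recently used prefix. Values are last-use times (could drive
    // staleness-based flushing as well).
    this.prefixes = new Map();
  }

  // Record that `prefix` is in use; flush the oldest prefixes past the limit.
  touch(prefix) {
    this.prefixes.delete(prefix); // re-insert to mark as most recently used
    this.prefixes.set(prefix, Date.now());
    while (this.prefixes.size > this.maxPrefixes) {
      const oldest = this.prefixes.keys().next().value;
      this.flush(oldest);
    }
  }

  // Remove every cached entry belonging to `prefix`, then forget the prefix.
  flush(prefix) {
    for (const key of [...this.store.keys()]) {
      if (key.startsWith(`${prefix}:`)) this.store.delete(key);
    }
    this.prefixes.delete(prefix);
  }
}

// Usage: three request-scoped prefixes, but only two may stay alive.
const store = new Map();
const registry = new PrefixRegistry(store, 2);
for (const prefix of ['a', 'b', 'c']) {
  store.set(`${prefix}:_Schema`, { prefix });
  registry.touch(prefix);
}
console.log([...store.keys()]); // [ 'b:_Schema', 'c:_Schema' ]
```

Flushing by count keeps memory bounded even when prefixes are random and never reused. The trade-off is that a long-running request may lose its cached schema and have to reload it.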

The issue is that:

We could manually prune the cache after each request or periodically, and/or, when the request is over, remove the key associated with it from the cache.
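
One way to sketch the "remove the key when the request is over" option: give each request its own random prefix and delete that prefix's entries when the response ends. The sketch again assumes a `Map` keyed as `${prefix}:${key}`, and `attachRequestSchemaCache` is a hypothetical helper, not part of parse-server. The `'finish'` and `'close'` events are standard Node.js `http.ServerResponse` events. An `EventEmitter` stands in for the response so the example runs on its own.

```javascript
const { EventEmitter } = require('events');
const crypto = require('crypto');

// Hypothetical sketch: each request gets its own schema-cache prefix, and
// that prefix's entries are dropped once the response is done.
// `store` is assumed to be a Map keyed by `${prefix}:${key}`.
function attachRequestSchemaCache(store, res) {
  const prefix = crypto.randomBytes(8).toString('hex');
  // Idempotent, so it is safe if both events fire.
  const cleanup = () => {
    for (const key of [...store.keys()]) {
      if (key.startsWith(`${prefix}:`)) store.delete(key);
    }
  };
  // http.ServerResponse emits 'finish' when the response has been sent and
  // 'close' when it completes or the connection is terminated early.
  res.once('finish', cleanup);
  res.once('close', cleanup);
  return {
    get: (key) => store.get(`${prefix}:${key}`),
    set: (key, value) => store.set(`${prefix}:${key}`, value),
  };
}

// Usage with a stand-in response object.
const store = new Map();
const res = new EventEmitter(); // plays the role of an http.ServerResponse
const cache = attachRequestSchemaCache(store, res);
cache.set('_Schema', { className: 'GameScore' });
console.log(store.size); // 1
res.emit('finish');
console.log(store.size); // 0
```

Listening for `'close'` as well as `'finish'` matters for aborted requests. Otherwise their entries would never be released.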

Any fix for this so far?

It reduces the cache sizes for sure.

I suppose a reduced cache size = reduced performance?

Nope, increased performance. But it may cause issues if multiple schema mutations are run concurrently.

The _Schema query has been in our slow queries tab on mLab for a while. Will setting this affect the number of _Schema queries, or only the memory used to cache the results? Edit: I found this in Definitions.js, so it should also reduce the number of queries run.

Yes, that's what it's meant for.

Closing via #5612.

Issue Description

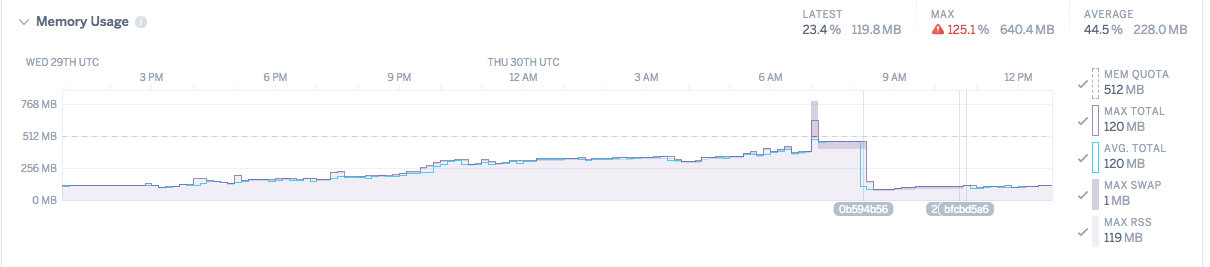

It has been observed that the new caching mechanism (LRUCache) does not proactively prune its cache after a certain amount of time, so memory usage keeps growing until it explodes.

Steps to reproduce

require('heapdump') in your startup script

Expected Results

Memory usage should remain roughly constant; unused data should not be kept indefinitely.

Environment Setup

Server

Database

Logs/Trace

See the discussion in #4235.

Suggested resolution:

1- Make enableSingleSchemaCache the default (this should be fairly harmless)

2- Actively clean up the schema cache used by a request when its response ends

3- Periodically prune the LRU cache, dropping older values
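
Item 3 could look roughly like the sketch below. It is an LRU cache whose entries also carry an expiry time, plus a periodic sweep, so stale entries are dropped even if nobody reads them again. `TtlLruCache`, its options, and the injectable clock are illustrative assumptions. This is not the LRUCache adapter shipped with parse-server.

```javascript
// Hypothetical sketch: LRU eviction by size plus time-based expiry,
// with an optional background sweep. Not parse-server's LRUCache.
class TtlLruCache {
  constructor({ maxSize = 10000, ttlMs = 5000, now = Date.now } = {}) {
    this.maxSize = maxSize;
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, handy for tests
    this.map = new Map(); // insertion order == least to most recently used
  }

  get(key) {
    const entry = this.map.get(key);
    if (!entry) return undefined;
    if (entry.expires <= this.now()) {
      this.map.delete(key); // lazily drop an expired entry on read
      return undefined;
    }
    // Move the entry to the most-recently-used end.
    this.map.delete(key);
    this.map.set(key, entry);
    return entry.value;
  }

  set(key, value) {
    this.map.delete(key);
    this.map.set(key, { value, expires: this.now() + this.ttlMs });
    // Evict least recently used entries beyond the size cap.
    while (this.map.size > this.maxSize) {
      this.map.delete(this.map.keys().next().value);
    }
  }

  // Drop every expired entry; returns how many were removed.
  prune() {
    const t = this.now();
    let removed = 0;
    for (const [key, entry] of this.map) {
      if (entry.expires <= t) {
        this.map.delete(key); // deleting during Map iteration is safe
        removed += 1;
      }
    }
    return removed;
  }

  // Run prune() every `intervalMs`; unref() keeps the timer from holding
  // the Node process open. Returns a function that stops the sweep.
  startPruning(intervalMs = 60000) {
    const timer = setInterval(() => this.prune(), intervalMs);
    timer.unref();
    return () => clearInterval(timer);
  }
}

// Usage with a fake clock.
let fakeNow = 0;
const cache = new TtlLruCache({ maxSize: 2, ttlMs: 1000, now: () => fakeNow });
cache.set('a', 1);
cache.set('b', 2);
cache.get('a'); // 'a' becomes the most recently used entry
cache.set('c', 3); // evicts 'b', the least recently used entry
fakeNow = 1500;
console.log(cache.prune()); // 2 ('a' and 'c' have both expired)
```

Expiring on read alone would not fix the reported growth, because entries that are never read again would never be touched. The periodic `prune()` is what bounds memory for those.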
