diff --git a/docs/source/_static/img/llama_ios_app.mp4 b/docs/source/_static/img/llama_ios_app.mp4

index 2f5df08984d..b4bf23cfdf6 100644

Binary files a/docs/source/_static/img/llama_ios_app.mp4 and b/docs/source/_static/img/llama_ios_app.mp4 differ

diff --git a/docs/source/_static/img/llama_ios_app.png b/docs/source/_static/img/llama_ios_app.png

index d9088abc4f9..fff399cfe1d 100644

Binary files a/docs/source/_static/img/llama_ios_app.png and b/docs/source/_static/img/llama_ios_app.png differ

diff --git a/examples/demo-apps/apple_ios/LLaMA/README.md b/examples/demo-apps/apple_ios/LLaMA/README.md

index 6fa663d9fce..12bc50338ed 100644

--- a/examples/demo-apps/apple_ios/LLaMA/README.md

+++ b/examples/demo-apps/apple_ios/LLaMA/README.md

@@ -1,100 +1,47 @@

# ExecuTorch Llama iOS Demo App

-**[UPDATE - 10/24]** We have added support for running quantized Llama 3.2 1B/3B models in demo apps on the [XNNPACK backend](https://github.com/pytorch/executorch/blob/main/examples/demo-apps/apple_ios/LLaMA/docs/delegates/xnnpack_README.md). We currently support inference with SpinQuant and QAT+LoRA quantization methods.

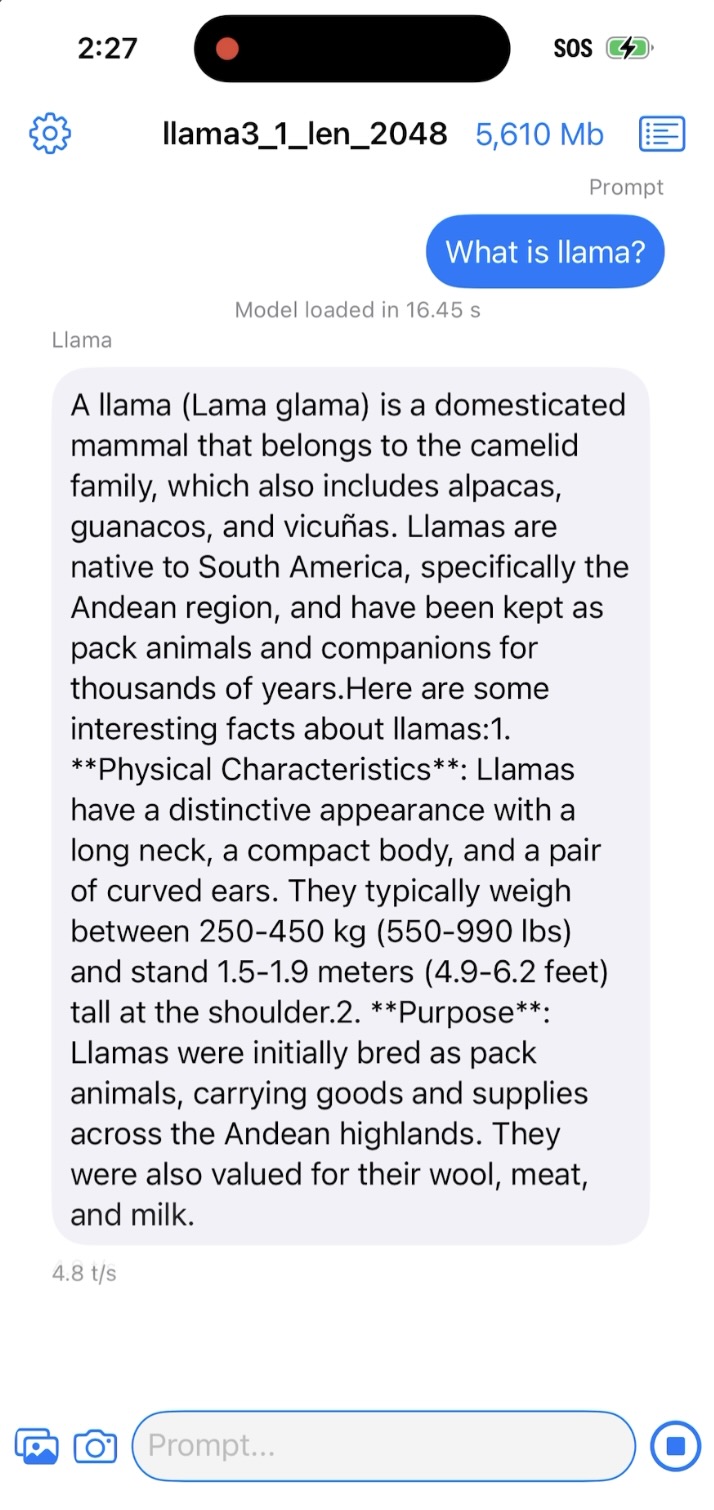

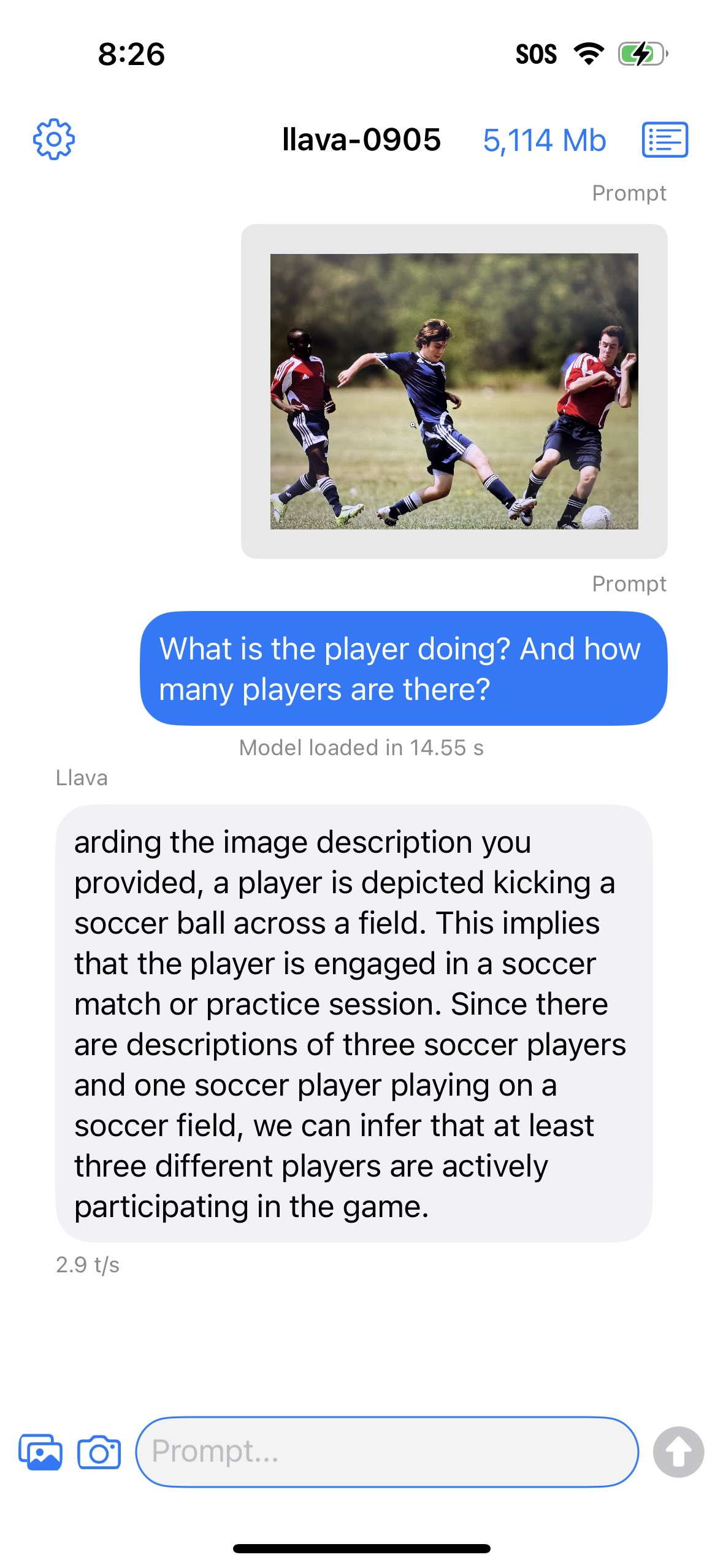

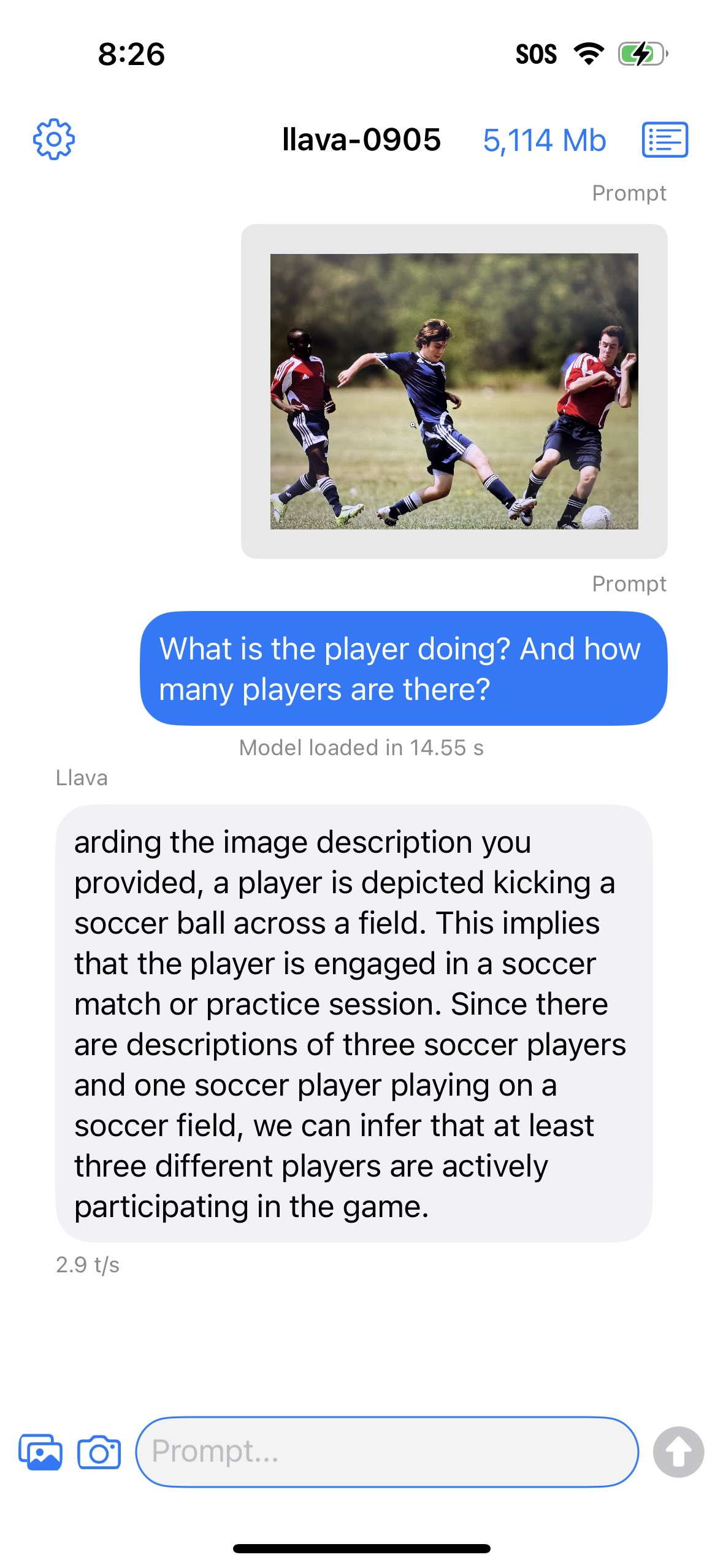

+Get hands-on with running LLaMA and LLaVA models — exported via ExecuTorch — natively on your iOS device!

-We’re excited to share that the newly revamped iOS demo app is live and includes many new updates to provide a more intuitive and smoother user experience with a chat use case! The primary goal of this app is to showcase how easily ExecuTorch can be integrated into an iOS demo app and how to exercise the many features ExecuTorch and Llama models have to offer.

-

-This app serves as a valuable resource to inspire your creativity and provide foundational code that you can customize and adapt for your particular use case.

-

-Please dive in and start exploring our demo app today! We look forward to any feedback and are excited to see your innovative ideas.

-

-## Key Concepts

-From this demo app, you will learn many key concepts such as:

-* How to prepare Llama models, build the ExecuTorch library, and perform model inference across delegates

-* Expose the ExecuTorch library via Swift Package Manager

-* Familiarity with current ExecuTorch app-facing capabilities

-

-The goal is for you to see the type of support ExecuTorch provides and feel comfortable with leveraging it for your use cases.

-

-## Supported Models

-

-As a whole, the models that this app supports are (varies by delegate):

-* Llama 3.2 Quantized 1B/3B

-* Llama 3.2 1B/3B in BF16

-* Llama 3.1 8B

-* Llama 3 8B

-* Llama 2 7B

-* Llava 1.5 (only XNNPACK)

-

-## Building the application

-First it’s important to note that currently ExecuTorch provides support across several delegates. Once you identify the delegate of your choice, select the README link to get a complete end-to-end instructions for environment set-up to export the models to build ExecuTorch libraries and apps to run on device:

-

-| Delegate | Resource |

-| ------------------------------ | --------------------------------- |

-| XNNPACK (CPU-based library) | [link](https://github.com/pytorch/executorch/blob/main/examples/demo-apps/apple_ios/LLaMA/docs/delegates/xnnpack_README.md)|

-| MPS (Metal Performance Shader) | [link](https://github.com/pytorch/executorch/blob/main/examples/demo-apps/apple_ios/LLaMA/docs/delegates/mps_README.md) |

-

-## How to Use the App

-This section will provide the main steps to use the app, along with a code snippet of the ExecuTorch API.

-

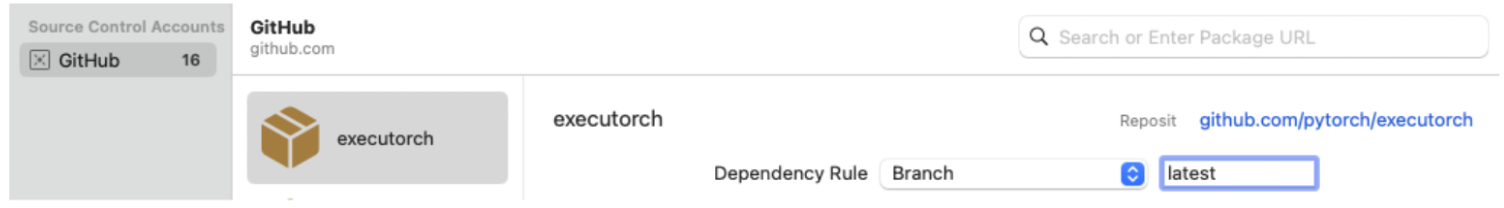

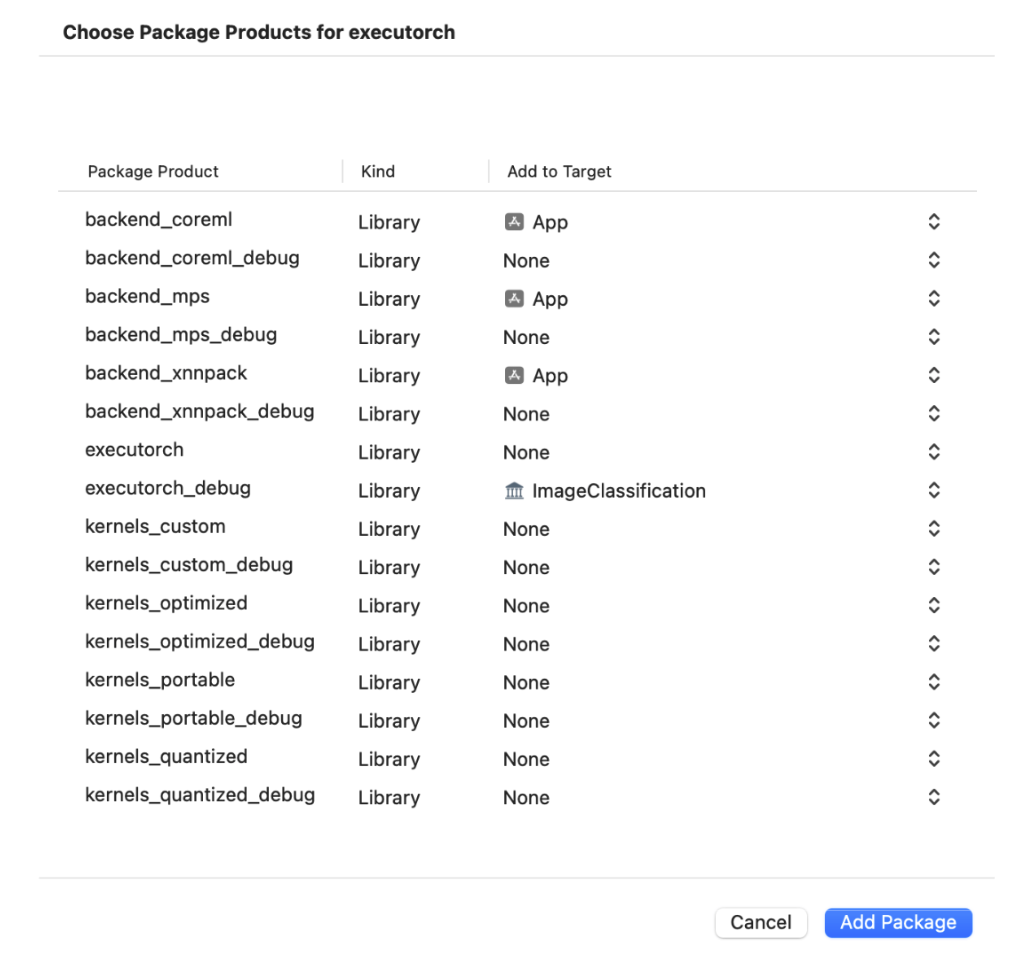

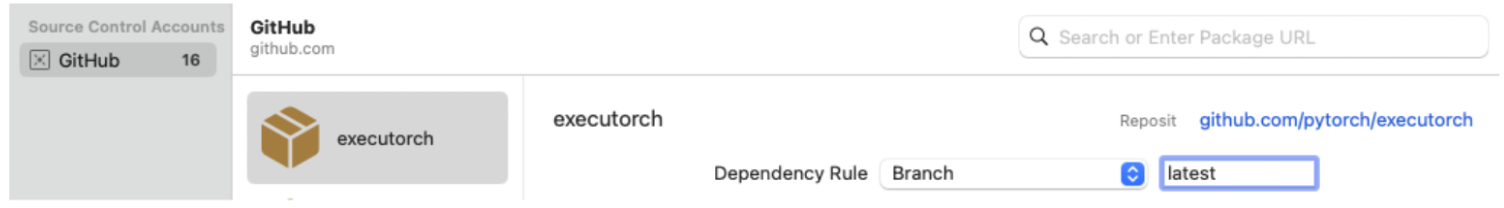

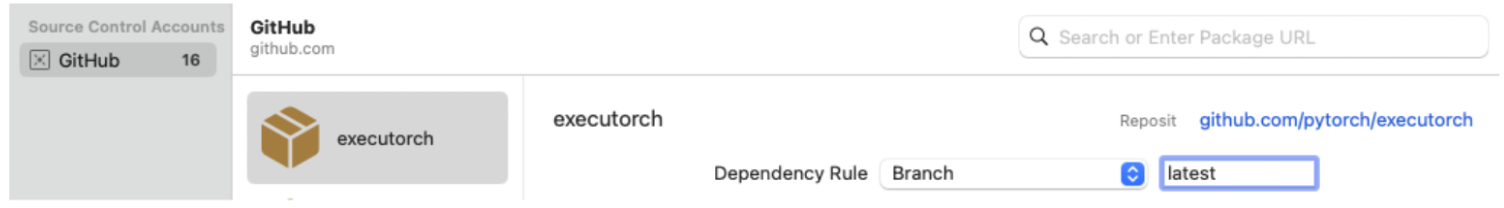

-### Swift Package Manager

-

-ExecuTorch runtime is distributed as a Swift package providing some .xcframework as prebuilt binary targets.

-Xcode will download and cache the package on the first run, which will take some time.

-

-Note: If you're running into any issues related to package dependencies, quit Xcode entirely, delete the whole executorch repo, clean the caches by running the command below in terminal and clone the repo again.

-

-```

-rm -rf \

- ~/Library/org.swift.swiftpm \

- ~/Library/Caches/org.swift.swiftpm \

- ~/Library/Caches/com.apple.dt.Xcode \

- ~/Library/Developer/Xcode/DerivedData

-```

-

-Link your binary with the ExecuTorch runtime and any backends or kernels used by the exported ML model. It is recommended to link the core runtime to the components that use ExecuTorch directly, and link kernels and backends against the main app target.

-

-Note: To access logs, link against the Debug build of the ExecuTorch runtime, i.e., the executorch_debug framework. For optimal performance, always link against the Release version of the deliverables (those without the _debug suffix), which have all logging overhead removed.

-

-For more details integrating and Running ExecuTorch on Apple Platforms, checkout this [link](https://pytorch.org/executorch/0.6/using-executorch-ios).

-

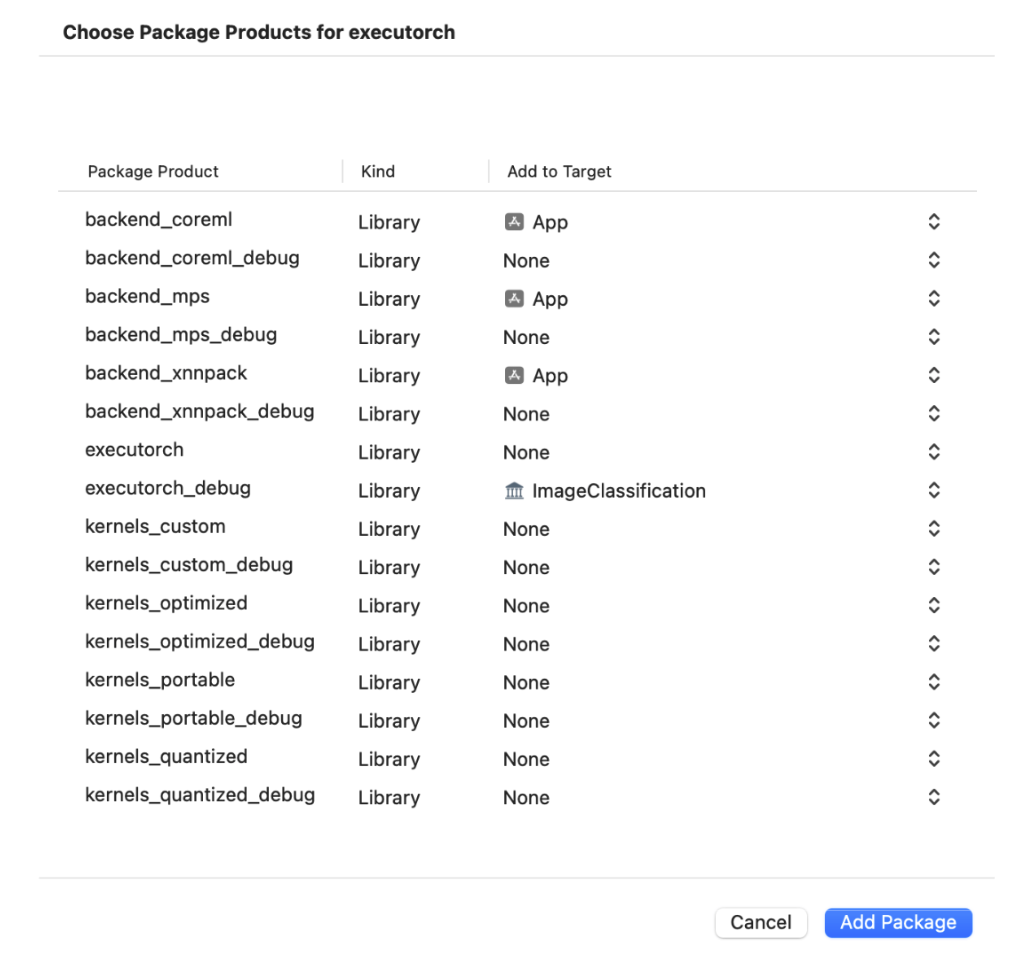

-### XCode

-* Open XCode and select "Open an existing project" to open `examples/demo-apps/apple_ios/LLama`.

-* Ensure that the ExecuTorch package dependencies are installed correctly, then select which ExecuTorch framework should link against which target.

+*Click the image below to see it in action!*

- +

+

+

+  +

+

-

- -

-

+## Requirements

+- [Xcode](https://apps.apple.com/us/app/xcode/id497799835?mt=12/) 15.0 or later

+- [Cmake](https://cmake.org/download/) 3.19 or later

+ - Download and open the macOS `.dmg` installer and move the Cmake app to `/Applications` folder.

+ - Install Cmake command line tools: `sudo /Applications/CMake.app/Contents/bin/cmake-gui --install`

+- A development provisioning profile with the [`increased-memory-limit`](https://developer.apple.com/documentation/bundleresources/entitlements/com_apple_developer_kernel_increased-memory-limit) entitlement.

-* Run the app. This builds and launches the app on the phone.

-* In app UI pick a model and tokenizer to use, type a prompt and tap the arrow buton

+## Models

-## Copy the model to Simulator

+Download already exported LLaMA/LLaVA models along with tokenizers from [HuggingFace](https://huggingface.co/executorch-community) or export your own empowered by [XNNPACK](docs/delegates/xnnpack_README.md) or [MPS](docs/delegates/mps_README.md) backends.

-* Drag&drop the model and tokenizer files onto the Simulator window and save them somewhere inside the iLLaMA folder.

-* Pick the files in the app dialog, type a prompt and click the arrow-up button.

+## Build and Run

-## Copy the model to Device

+1. Make sure git submodules are up-to-date:

+ ```bash

+ git submodule update --init --recursive

+ ```

-* Wire-connect the device and open the contents in Finder.

-* Navigate to the Files tab and drag&drop the model and tokenizer files onto the iLLaMA folder.

-* Wait until the files are copied.

+2. Open the Xcode project:

+ ```bash

+ open examples/demo-apps/apple_ios/LLaMA/LLaMA.xcodeproj

+ ```

+

+3. Click the Play button to launch the app in the Simulator.

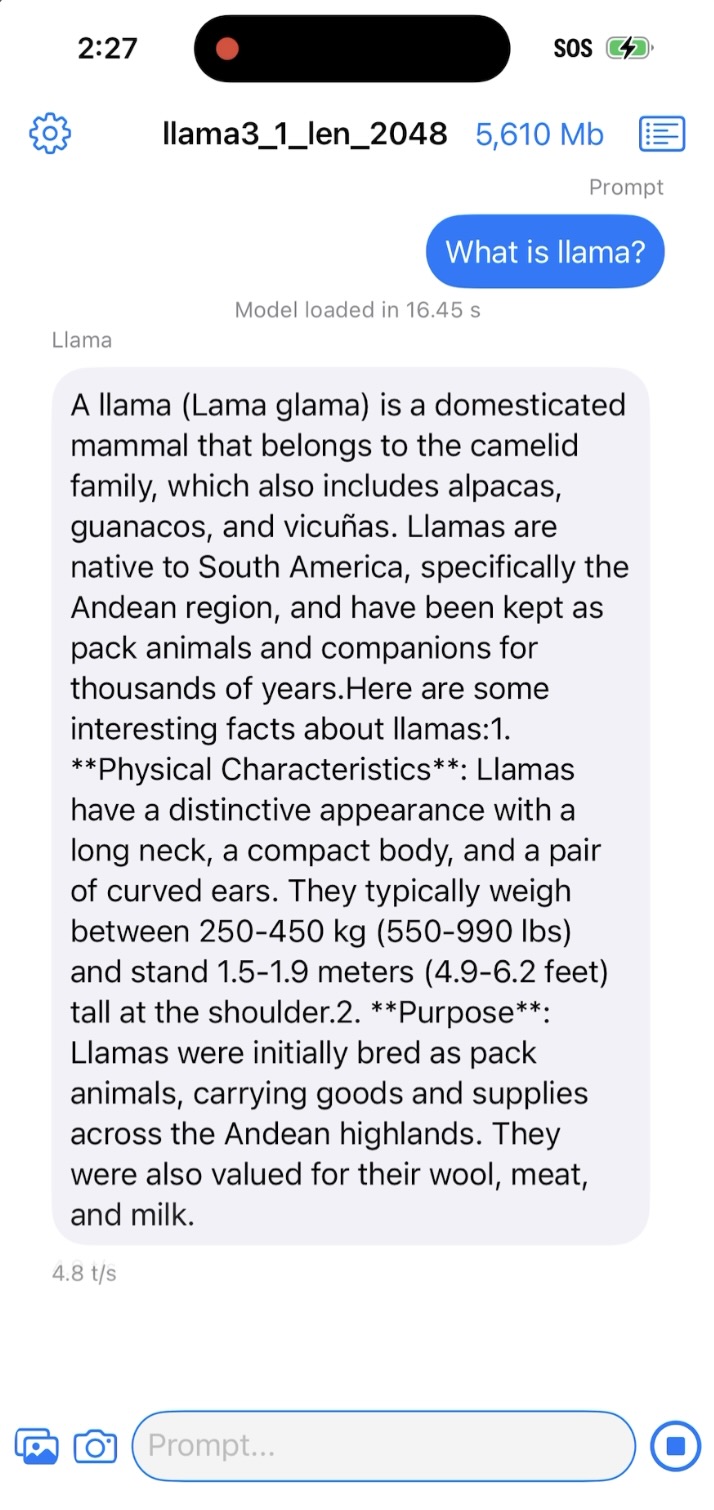

-If the app successfully run on your device, you should see something like below:

+4. To run on a device, ensure you have it set up for development and a provisioning profile with the `increased-memory-limit` entitlement. Update the app's bundle identifier to match your provisioning profile with the required capability.

-

- -

-

+5. After successfully launching the app, copy the exported ExecuTorch model (`.pte`) and tokenizer (`.model`) files to the iLLaMA folder.

-For Llava 1.5 models, you can select and image (via image/camera selector button) before typing prompt and send button.

+ - **For the Simulator:** Drag and drop both files onto the Simulator window and save them in the `On My iPhone > iLLaMA` folder.

+ - **For a Device:** Open a separate Finder window, navigate to the Files tab, drag and drop both files into the iLLaMA folder, and wait for the copying to finish.

-

- -

-

+6. Follow the app's UI guidelines to select the model and tokenizer files from the local filesystem and issue a prompt.

-## Reporting Issues

-If you encountered any bugs or issues following this tutorial please file a bug/issue here on [Github](https://github.com/pytorch/executorch/issues/new).

+For more details check out the [Using ExecuTorch on iOS](../../../../docs/source/using-executorch-ios.md) page.

+

+

+

+  +

+

+

+

+

+  +

+

-

- -

- -

-