
“transforms.functional” Broken When Using Images With Alpha #2376


Comments


Thanks for opening this issue! As you noted, this is a problem with Pillow, which is a dependency of torchvision. I would like to note that we are progressively adding support for the transforms to work directly on Tensors (see #1375 and #2292), so in the near future you'll be able to call those transforms directly on tensors. That path should support alpha channels as well, and this issue will be fixed. Until then, I would recommend opening an issue in Pillow so that they can look into fixing it there as well.

I'm not sure what you meant there --

Glad to see tensors will be supported directly. The PIL dependency introduced issues with performance and the number of channels that I had to live with or write my own workarounds for.

I don't think this is an issue for PIL since it is a library meant for images, and zeroing out pixels that won't be seen due to transparency makes sense for them.

Ah, it looks like I mixed up the "opencv_transforms" version when testing; disregard that, then.

Well, I'm not sure I agree. The alpha channel seems to be handled as a separate channel in their interpolation kernels, so this might be a bug?

The underlying issue is that in PIL, the transform function (which is called by functions like rotate) explicitly converts "RGBA" images to the premultiplied-alpha format "RGBa". This multiplies the RGB components by alpha, permanently zeroing out the RGB data wherever alpha is zero. PIL then works on the already premultiplied image and converts it back to "RGBA". So yes, it's not a bug for them; it is pretty deliberate. You can replicate the issue minimally with
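The premultiply/unpremultiply round trip described above can be modeled in a few lines of plain Python. This is a simplified sketch, not Pillow's actual code: the helper names `premultiply`, `unpremultiply` and `round_trip` are hypothetical, and integer floor division stands in for Pillow's own rounding.

```python
def premultiply(pixel):
    """RGBA -> premultiplied RGBa: scale each color channel by alpha / 255."""
    r, g, b, a = pixel
    return (r * a // 255, g * a // 255, b * a // 255, a)


def unpremultiply(pixel):
    """Premultiplied RGBa -> RGBA: divide each color channel by alpha / 255."""
    r, g, b, a = pixel
    if a == 0:
        # Nothing to divide by: the color stays at its premultiplied value (0),
        # so the original RGB data cannot be recovered.
        return (r, g, b, a)
    return (min(255, r * 255 // a), min(255, g * 255 // a), min(255, b * 255 // a), a)


def round_trip(pixel):
    """What a PIL-style transform does to the color data, ignoring the geometry."""
    return unpremultiply(premultiply(pixel))


if __name__ == "__main__":
    print(round_trip((10, 200, 30, 255)))  # -> (10, 200, 30, 255)
    print(round_trip((10, 200, 30, 0)))    # -> (0, 0, 0, 0)
```

Any pixel with alpha = 0 comes back with RGB = 0, which matches the zeroed-out channels described in this issue.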


Ok, makes sense. @vfdev-5 is currently working on making the remaining torchvision transforms that depend on PIL also work directly with torch Tensors by using torch operations, so this will be fixed (when using torch Tensors) in the next few weeks.

Now all transforms work on Tensors as well, so I believe this issue should be fixed if you convert the PIL image to a Tensor and apply the transforms directly on the Tensor. @vfdev-5, can you double-check and close the issue if that's the case?

Currently, if we pass input to torch tensor and apply a rotation it gives the following result import torch

...

img = Image.open('test.png')

np_img = np.asarray(img)

t_img = torch.from_numpy(np_img).permute(2, 0, 1)

out = TF.rotate(t_img, 30)

np_out = out.permute(1, 2, 0).numpy()

print(np_out.shape)

plt.figure(figsize=(20, 7))

for i in range(np_out.shape[-1]):

plt.subplot(1, 4, i + 1)

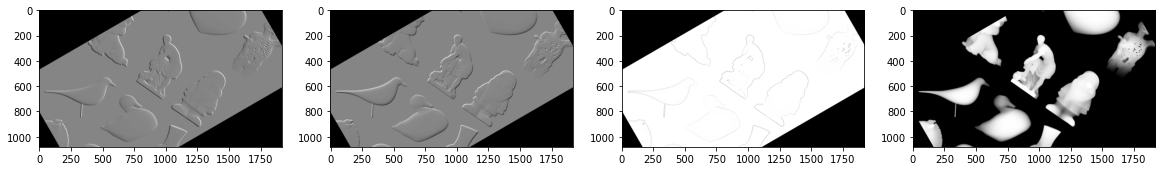

plt.imshow(np_out[:, :, i], cmap='gray')vs PIL So, the image data with alpha = 0 inside the image remains present in rotated image, but fill value should be adapted according to the application. Let's close the issue as solved and @Huud feel free to reopen if you need more support on that. Thanks |

🐛 Bug

Some “torchvision.transforms.functional” transformations such as “TF.rotate” and “TF.resize” break the image when the image is in RGBA format, as you can see here:

The blue channel before the rotation is on the left and after it on the right: everywhere the alpha channel is black, the other channels were also made black.

Steps to reproduce the behavior:

1- Get an image with an alpha channel. This is the one I used:

2- Apply some of these functions to it, like this:

Expected behavior:

It should act similarly to "transforms.RandomRotation", which works properly: the data in the first three channels is not affected by the data in the fourth channel. Not every problem that uses "vision" involves ordinary images, and not every fourth channel is transparency. For example, in my case this is a vector bump map where the first three channels are the XYZ normal components and the fourth is a height component.

Note that this bug happens because PyTorch uses PIL's built-in functions, such as rotate, which are not meant for data science and simply throw away the data in the other channels wherever the corresponding fourth-channel value is zero.
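One possible workaround that stays within Pillow (not proposed in this thread) is to rotate each band as its own single-channel "L" image, so there is no alpha channel for Pillow to premultiply, and then merge the bands back together. The sketch below assumes that approach; the helper name `rotate_bands_independently` is hypothetical, and bilinear resampling is an arbitrary choice for illustration.

```python
from PIL import Image


def rotate_bands_independently(img, angle):
    """Rotate every band of `img` as a separate single-channel image.

    Hypothetical helper: each band is an "L" image with no alpha, so each
    one is interpolated on its own, without premultiplication by alpha.
    """
    bands = img.split()  # e.g. (R, G, B, A) for an RGBA image, each in mode "L"
    rotated = [band.rotate(angle, resample=Image.BILINEAR) for band in bands]
    return Image.merge(img.mode, rotated)  # reassemble in the original mode


if __name__ == "__main__":
    # Fully transparent image whose RGB values still carry data (e.g. normals)
    img = Image.new("RGBA", (16, 16), (10, 200, 30, 0))
    per_band = rotate_bands_independently(img, 30)
    direct = img.rotate(30, resample=Image.BILINEAR)
    # Compare the center pixel of both results
    print("per-band:", per_band.getpixel((8, 8)))
    print("direct:  ", direct.getpixel((8, 8)))
```

The cost is one resampling pass per band instead of one for the whole image, which is usually acceptable for four channels.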

Workaround:

Use the opencv_transforms library instead, which works properly. It is a rewrite of PyTorch’s transforms based on OpenCV instead of PIL, so it is also faster:

https://github.com/jbohnslav/opencv_transforms
