

Closed

Labels

User (used to mark user questions)

Description

I am using the `paddle.infer()` method and call it once for every 2,000 input records. The main part of the code is as follows:

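```python
import paddle.v2 as paddle  # assumed: the v2 API, where paddle.infer() lives

# get_sparse_index_data, user_features, parameters and feeding are defined
# elsewhere in the script.

input_data = []
res = []
ids = []
count = 0

with open("./data.infer.user", "w") as out_file, open("./data.user") as in_file:
    for line in in_file:
        data = line.strip().split("\t")
        if len(data) == 2:
            # id <TAB> features: the second input slot is left empty
            input_data.append([get_sparse_index_data(data[1]), get_sparse_index_data([])])
            ids.append(data[0])
        elif len(data) == 3:
            input_data.append([get_sparse_index_data(data[1]), get_sparse_index_data(data[2])])
            ids.append(data[0])
        else:
            continue  # skip malformed lines

        count += 1
        if count == 2000:
            # one paddle.infer() call per batch of 2000 records
            res = paddle.infer(output_layer=user_features, parameters=parameters,
                               input=input_data, feeding=feeding)
            for index in range(count):
                vec = res[index]  # output vector for this record
                output = ""
                for i in range(32):  # write the first 32 values
                    output += str(round(vec[i], 4)) + " "
                out_file.write(ids[index] + "\t" + output.strip() + "\n")
            input_data = []
            ids = []
            count = 0
    # Records after the last full batch of 2000 are not inferred by this loop.
```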
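The batching described above can be sketched as a small helper that does not depend on Paddle and also runs inference on the final partial batch. The loop above skips that batch whenever the record count is not a multiple of 2000. This is a minimal sketch under stated assumptions. All names are hypothetical: `to_input` stands in for `get_sparse_index_data`, and `infer_batch` stands in for whatever callable wraps the Paddle inference call.

```python
import io
from typing import Callable, Iterable, Iterator, Sequence, TextIO, Tuple


def iter_batches(items: Iterable, batch_size: int) -> Iterator[list]:
    """Yield lists of up to batch_size items, including a final partial batch."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # leftover records that do not fill a whole batch
        yield batch


def parse_records(lines: Iterable[str], to_input: Callable) -> Iterator[Tuple[str, list]]:
    """Turn 'id<TAB>f1[<TAB>f2]' lines into (id, [input1, input2]); skip others."""
    for line in lines:
        data = line.strip().split("\t")
        if len(data) not in (2, 3):
            continue
        second = data[2] if len(data) == 3 else []  # empty second slot, as in the issue
        yield data[0], [to_input(data[1]), to_input(second)]


def format_row(record_id: str, vec: Sequence[float], dim: int = 32) -> str:
    """One output line: id, a tab, then the first dim values rounded to 4 places."""
    return record_id + "\t" + " ".join(str(round(vec[i], 4)) for i in range(dim)) + "\n"


def infer_lines(lines: Iterable[str], to_input: Callable, infer_batch: Callable,
                out: TextIO, batch_size: int = 2000, dim: int = 32) -> None:
    """Call infer_batch once per batch and write one formatted line per record."""
    for batch in iter_batches(parse_records(lines, to_input), batch_size):
        results = infer_batch([sample for _, sample in batch])
        for (record_id, _), vec in zip(batch, results):
            out.write(format_row(record_id, vec, dim))


if __name__ == "__main__":
    # Toy run with a fake inference function in place of Paddle.
    demo_lines = ["a\tx\n", "b\tx\ty\n", "malformed\n", "c\tz\n"]
    buf = io.StringIO()
    infer_lines(demo_lines, to_input=lambda f: f,
                infer_batch=lambda samples: [[0.5, 0.25] for _ in samples],
                out=buf, batch_size=2, dim=2)
    print(buf.getvalue(), end="")
```

Using a generator keeps only one batch in memory at a time. The `if batch:` check after the loop is the part that handles the trailing records.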

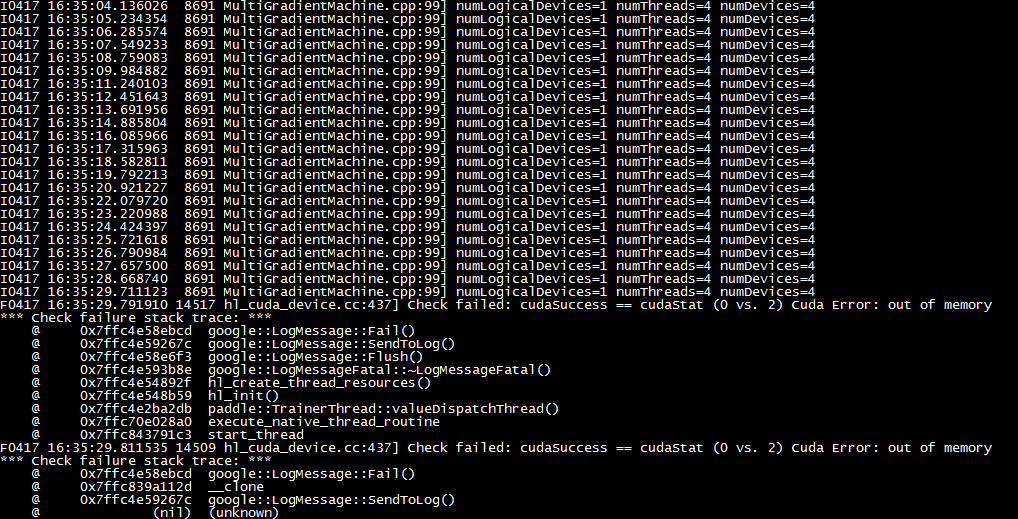

After running for a while, it always fails with `Cuda Error: out of memory`.

Is this a Paddle problem or a Python problem?
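One mitigation to try is to construct the inference object once and reuse it for every batch. This assumes the out-of-memory error comes from GPU memory that each `paddle.infer()` call allocates and does not fully release, which is not confirmed here. In the PaddlePaddle v2 API, `paddle.infer()` appears to be a convenience wrapper that builds a new `paddle.inference.Inference` object on each call. The sketch below hoists that construction out of the batch loop. `ReusableInference` and `FakeInferer` are hypothetical names. `FakeInferer` is a stand-in that counts how many times it is constructed, so the sketch runs without Paddle.

```python
from typing import Any, Callable, List, Sequence


class ReusableInference:
    """Build one inference object up front and reuse it for every batch.

    `build` is any zero-argument factory. In a Paddle v2 script it might wrap
    paddle.inference.Inference(...), which is an assumption, not verified here.
    """

    def __init__(self, build: Callable[[], Any], **infer_kwargs: Any) -> None:
        self._inferer = build()            # constructed exactly once
        self._infer_kwargs = infer_kwargs  # e.g. feeding=...

    def __call__(self, batch: Sequence) -> Any:
        # Every batch goes through the same inferer instead of a fresh one.
        return self._inferer.infer(input=batch, **self._infer_kwargs)


class FakeInferer:
    """Hypothetical stand-in that counts constructions; not part of Paddle."""

    instances = 0

    def __init__(self) -> None:
        FakeInferer.instances += 1

    def infer(self, input: Sequence, **kwargs: Any) -> List[List[float]]:
        # Return one 32-value vector per input sample.
        return [[0.0] * 32 for _ in input]


if __name__ == "__main__":
    run = ReusableInference(FakeInferer)
    for _ in range(3):
        run([["x"], ["y"]])
    print(FakeInferer.instances)
```

In the original script, the construction might look like `ReusableInference(lambda: paddle.inference.Inference(output_layer=user_features, parameters=parameters), feeding=feeding)`, with `res = run(input_data)` replacing the per-batch `paddle.infer(...)` call. Check the `Inference` constructor and its `infer()` keywords against the installed Paddle version before relying on this.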
