Problem and repro

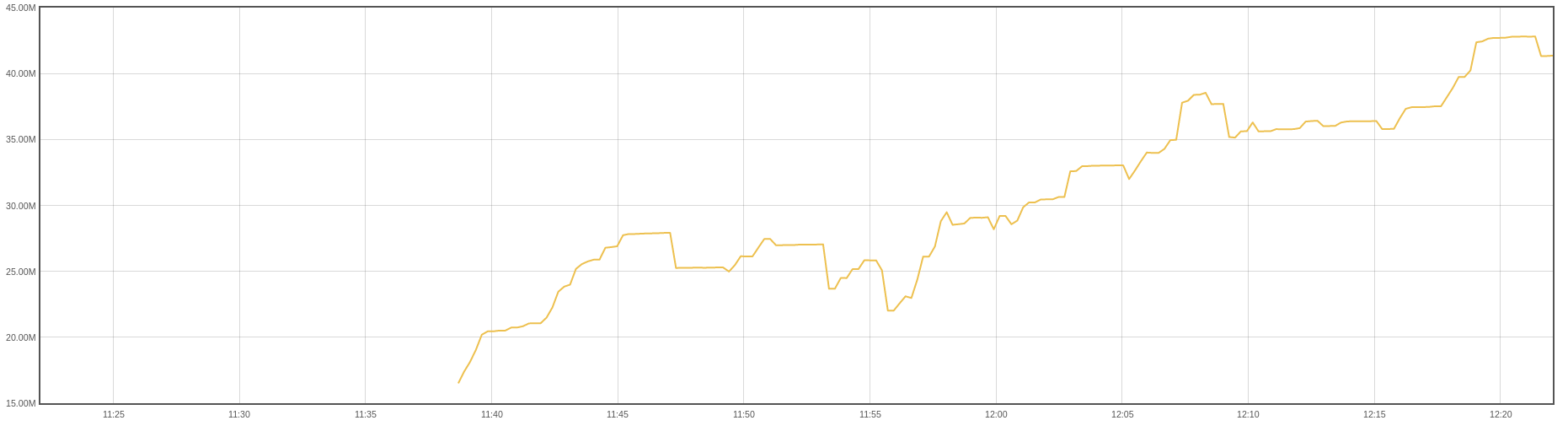

In some situations the destination container's memory consumption grows steadily until the container gets OOM-killed. We were able to reproduce this by rolling out emojivoto's pods every 30s (here the rollouts started at 11:55):

(The graph tracks container_memory_working_set_bytes{container="destination"}, which is the metric the OOM killer watches.)

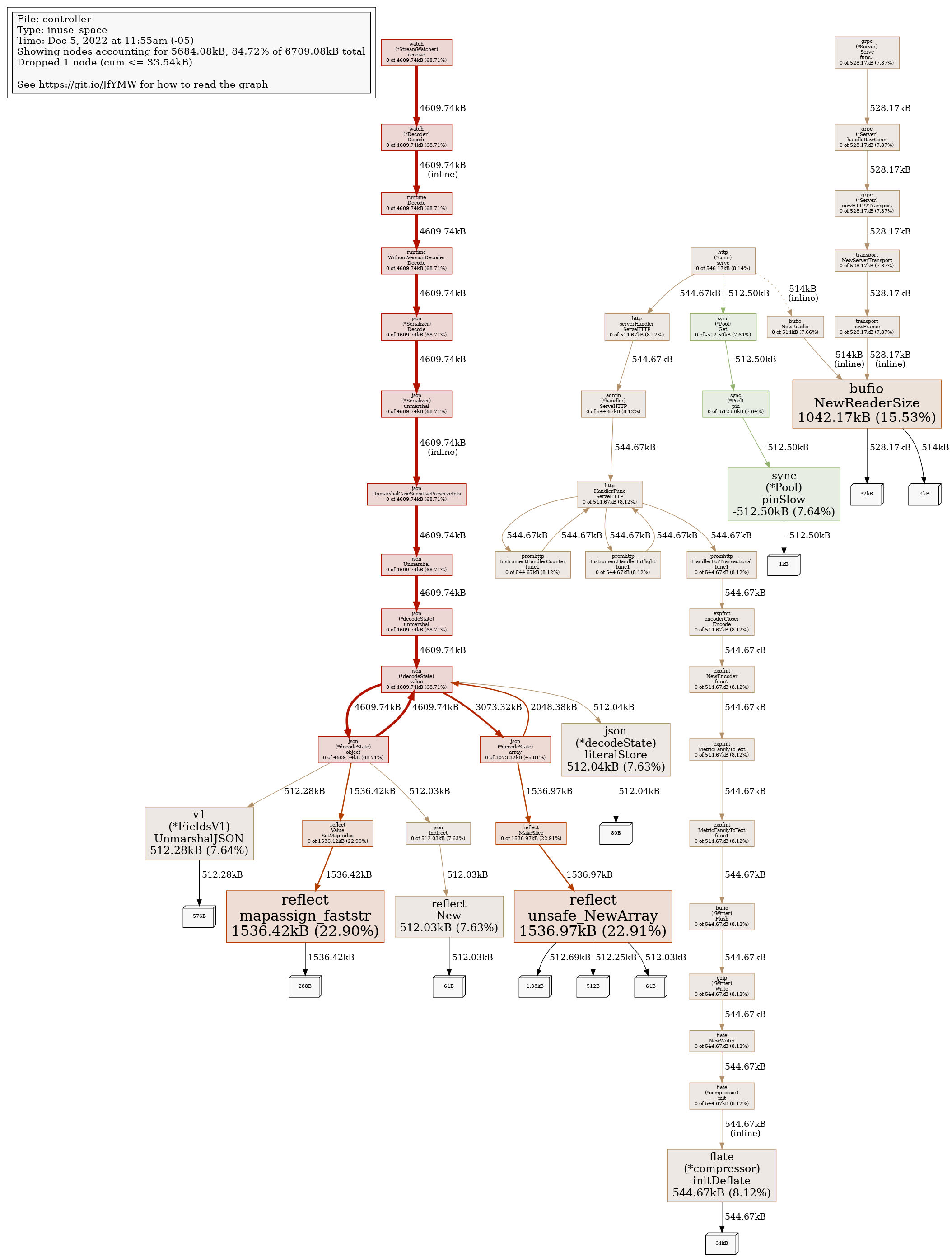

We took heap dumps before starting the rollouts and 25m after, and generated this comparative result:

# (using edge-22.12.1)

$ kubectl -n linkerd port-forward linkerd-destination-5dd6c5985f-sl2jz 9996 &

$ curl 'http://localhost:9996/debug/pprof/heap?gc=1' > heap-before.out

$ while true; do kubectl -n emojivoto rollout restart deploy ; sleep 30; done

# wait 10m

$ curl 'http://localhost:9996/debug/pprof/heap?gc=1' > heap-after.out

$ go tool pprof -png --base heap-before.out heap-after.out

This shows that 68.71% of the memory allocated during this time span was requested by k8s.io/apimachinery/pkg/watch.(*StreamWatcher).receive, so the growth does not come from linkerd's own code.

GC tuning

Lowering GOGC to 1 forces the GC to run as frequently as possible, but this only smooths out the curve above; overall memory consumption and its upward trend remain the same.

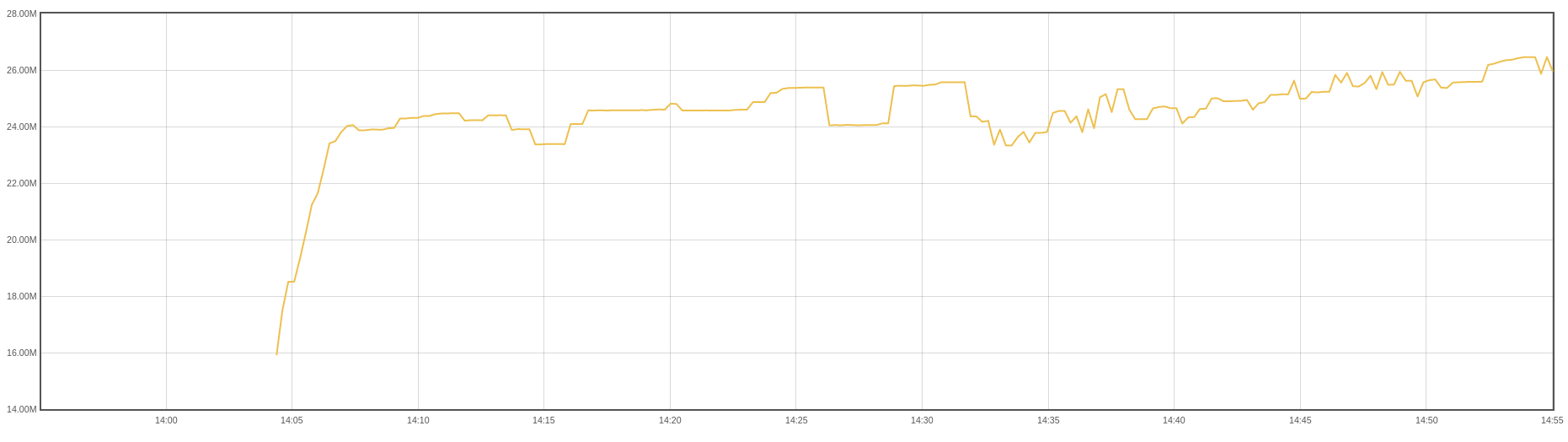

However, using GOMEMLIMIT (introduced in Go 1.19) helps. Setting it to 35MiB and triggering the rollouts at 14:32, we get this:

Before, memory went from 26MB up to 42MB during the rollouts; now it goes from 25MB to 26MB in about the same time. Memory usage still climbs, but far more slowly than before.
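For reference, the same soft limit can also be set from inside a Go program through `runtime/debug.SetMemoryLimit` (available since Go 1.19), which is the programmatic counterpart of the GOMEMLIMIT env var. The following is only a minimal sketch of that API; the helper names `applyMemoryLimit` and `currentMemoryLimit` are hypothetical and nothing here is taken from the destination controller's code.

```go
package main

import (
	"fmt"
	"runtime/debug"
)

// applyMemoryLimit (hypothetical helper) sets the runtime's soft memory
// limit in bytes and returns the limit that was previously in effect.
func applyMemoryLimit(limitBytes int64) int64 {
	return debug.SetMemoryLimit(limitBytes)
}

// currentMemoryLimit (hypothetical helper) reads the limit without
// changing it: a negative input to SetMemoryLimit leaves it untouched.
func currentMemoryLimit() int64 {
	return debug.SetMemoryLimit(-1)
}

func main() {
	const limit = 35 << 20 // 35 MiB, the value used in the experiment above

	prev := applyMemoryLimit(limit)
	fmt.Printf("previous limit: %d bytes\n", prev)
	fmt.Printf("current limit:  %d bytes\n", currentMemoryLimit())
}
```

The limit is soft: the runtime works harder to stay under it but does not guarantee it, so it complements rather than replaces the container's own memory limit.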

GOMEMLIMIT requires Go 1.19, and it is in fact recommended for containers with a memory limit. According to A Guide to the Go Garbage Collector:

> Do take advantage of the memory limit when the execution environment of your Go program is entirely within your control, and the Go program is the only program with access to some set of resources (i.e. some kind of memory reservation, like a container memory limit).
>
> A good example is the deployment of a web service into containers with a fixed amount of available memory.
>
> In this case, a good rule of thumb is to leave an additional 5-10% of headroom to account for memory sources the Go runtime is unaware of.

Course of action

Besides bumping Go to 1.19 in all the base images, we can add the GOMEMLIMIT env var to the manifests only when the Helm value memory.limit is set (values-ha.yaml sets it for the control plane containers), with a value of 90% of that limit.
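To make the 90% arithmetic concrete, here is a rough sketch of how a GOMEMLIMIT value could be derived from a Kubernetes-style memory limit. It is written in Go purely for illustration; the real change would live in the Helm templates. The function names `parseMemoryLimit` and `goMemLimit` are hypothetical, the parser only handles plain byte counts and the `Ki`/`Mi`/`Gi` suffixes, and `250Mi` is just an example input rather than a value taken from values-ha.yaml.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// binarySuffixes lists the Kubernetes binary quantity suffixes this
// sketch understands, with their byte multipliers.
var binarySuffixes = []struct {
	suffix string
	mult   int64
}{
	{"Ki", 1 << 10},
	{"Mi", 1 << 20},
	{"Gi", 1 << 30},
}

// parseMemoryLimit converts a quantity such as "250Mi" into bytes.
// Quantities without a known suffix are treated as plain byte counts.
func parseMemoryLimit(q string) (int64, error) {
	for _, s := range binarySuffixes {
		if strings.HasSuffix(q, s.suffix) {
			n, err := strconv.ParseInt(strings.TrimSuffix(q, s.suffix), 10, 64)
			if err != nil {
				return 0, fmt.Errorf("invalid quantity %q: %w", q, err)
			}
			return n * s.mult, nil
		}
	}
	n, err := strconv.ParseInt(q, 10, 64)
	if err != nil {
		return 0, fmt.Errorf("invalid quantity %q: %w", q, err)
	}
	return n, nil
}

// goMemLimit returns 90% of the container memory limit as a plain byte
// count, a form that GOMEMLIMIT accepts without a unit suffix.
func goMemLimit(q string) (string, error) {
	b, err := parseMemoryLimit(q)
	if err != nil {
		return "", err
	}
	return strconv.FormatInt(b*9/10, 10), nil
}

func main() {
	v, err := goMemLimit("250Mi") // example input only
	if err != nil {
		panic(err)
	}
	fmt.Println("GOMEMLIMIT=" + v) // prints GOMEMLIMIT=235929600
}
```

Emitting a plain byte count sidesteps the mismatch between Kubernetes suffixes (`Mi`) and the ones GOMEMLIMIT expects (`MiB`).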

Ref #8270