-

Notifications

You must be signed in to change notification settings - Fork 1.7k

Optimize duplicate rule ID check #1735

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

As already commented on #1667, disable the check or make it faster does not fix the issue #1663, instead put it under the carpet. IMHO, the problem is a race condition related to the nginx worker life cycle. This patch fix a symptom not the real problem. In a different setup (amount of rules, memory, vhosts, etc...) the problem is likely to appear again. As this is the current most notice symptom for the worker life cycle problem, I am holding on the merge till we fix the main problem. |

Replace an exponential search function with a stl set search.

13c6feb to

197eab2

Compare

|

Updated the commit message to remove the reference to #1663. This may have tangential impact but we do not consider it a fix for the issue noted at this time. |

|

@zimmerle curious as to your thoughts here, is this still being considered for merge at all? |

Based on the findings listed on #1735

|

Hi @p0pr0ck5, I am sorry for the delay with this review. I am afraid we won't be able to merge the patch as is. It took a while because I think you deserve to know in details why I am not accepting it, and I just had the chance to write about it today. In a simple paragraph: The description of the patch doesn't seem strictly accurate, I'm failing to see an exponential problem (not in this case). Your patch effectively improved the speed by creating a cache structure to easily find duplicate IDs, at the cost of memory usage which One thing that we do agree with you is that there is space for improvements in this articular piece of code. But first let me go over the gory technical details to widen our audience. The Problem Description In the commit message there's a mention about the search function being exponential. Just so we're all on the same page, In Big-O notation something is exponential when the time to process increases exponentially by the amount of inputs. For instance, the Selection Sort which is O(n^2) in best, average and worst case. This is not the case of this ModSecurity function as it have a "for" inside a "for" with two different sets. Exponential Search is also the name of a very popular search algorithm for infinity sets, which is actually O(log i) in average time complexity. I guess you are not referring to that either. That is the theoretical part of the review, as in practice, it may lead to a exponential problem, Let's test that in the field. Fortunately, that is not the case either. I will explain: At this point I would like to introduce the test app called load.cc, it is meant to help us testing the numbers in practice. It could be used by anyone that also wants to help us during this process. The load.cc app The load.cc app stress the mechanism in question with (almost) zero interferences of other components, in order to give us better comparative results and how much improvement was really made. The app is available here: It does mimic a use case when a giant rule set is loaded and folders and/or vhosts are merged with their rules with the intention of tuning the main rule set. All stressed with an abnormal amount of rules. Checking if there is any exponential problem at all Using the load.cc tool we can confirm that the merge/loading time is not actually

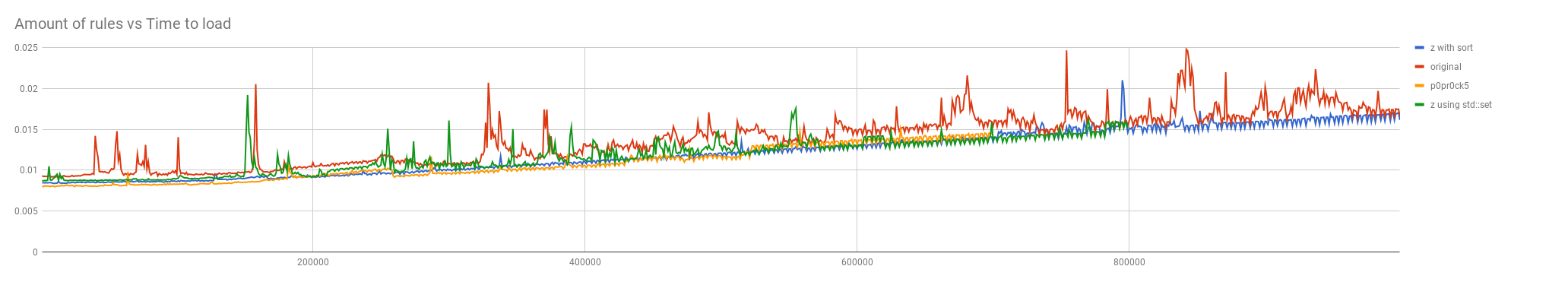

The conclusion is very clear: there is no exponential problem. Not theoretical, Understanding the patch There is a change in the function signature: instead of receiving the original 08f9a7f#diff-06c9e7fe9d6fb2c786762bf46bccad96L429 And here some variable renaming: 08f9a7f#diff-06c9e7fe9d6fb2c786762bf46bccad96L434 I believe the goal on both aforementioned changes is not improving the performance. Moving on... Here we have some an important logical change: 08f9a7f#diff-06c9e7fe9d6fb2c786762bf46bccad96L438 There is the replacement of one for loop with a std::find that loops on this new structure called m_ruleIds. The structure itself was declared here: 08f9a7f#diff-06c9e7fe9d6fb2c786762bf46bccad96R501 So, the patch effectively creates a caching structure that holds the data for a Is there a benefit to apply that patch? There is a real benefit to apply this patch. It will speed up the rules loading process a little. Depending on the scenario, it could be as fast as 2x. However it does use memory in a non ideal shape, as it stores something in memory that is already in memory: the rule id. So, let's read the patch again to understand the real need for this caching. The problem is definitely this for on line 438: So, we thought that instead of creating a caching to persist for the existence of the process, why not to create a stack cache disposed by the end of this validation? That was exactly what was done here: Notice that it is not optimal, it is using std::binary_search in a std::vector, in reality we just need to have a simple array and store the integers for a fast check, but i guess it is safe to live with it now. The charts below illustrates in details all the concerns that were raised during this study.

Having said all that, we hereby thanks @p0pr0ck5 for the work invested in this patch, for bringing this issue to light allowing us to invest more time improving it. Therefore we are proceeding to close it without accepting fully for the reasons pointed out above. Instead, we've added the modifications linked here to the master branch. We're open to any question or concerns that anyone may have. |

|

Hey @zimmerle, Thanks for the detailed write up. However I wanted to highlight something from your findings that does not correctly demonstrate the originally reported behavior (within the C API, and thus the Nginx connector). I found significantly different results when using a C harness instead of a C++ program to load many rulesets as laid out here: https://gist.github.com/p0pr0ck5/c5045eb3b6107917db771be3ef1df08b. The C harness shows behavior that is indeed exponential (please bear the very basic mock/graph): I believe the reason for this is that your mock creates a new This is not the same behavior as leveraged by the C API, which implements a single Thus your benchmark doesn't demonstrate an exponential time because that behavior in question is not exponential. :) What is exponential (or was, prior to What's more, your example could not correctly detect a duplicate ID between two different rulesets, only duplicate IDs loaded within the same call to This is all a moot point now that the behavior has been modified, but I did want to bring to light the disparity between the behavior seen within Nginx (originally reported in #1663), and the test harness you wrote. Thanks! |

Replace an expotential search function with a stl set search.