
Did copy(deep=True) break with 0.16.1? #4449


Comments


Thanks for the issue @blaylockbk. I think this is caused by #4426, which defers to copy-on-write rather than making an extra copy. Do you need this behavior per se?

Would …

The workaround is to call …

The direct source of the issue here is #4379. We probably should have been more careful with that change, because it is technically a regression. Previously, we would always load data into NumPy arrays when doing a deep copy, but after that change the underlying data structures are deep-copied rather than loaded into NumPy. That's probably more consistent with what users would generally expect from a "deep copy", but it is definitely a change from the previous behavior.
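A minimal, stdlib-only sketch of the distinction described above, assuming a lazily loaded variable can be modeled as an object that stores only a file path and reads from disk on access. `LazyFileArray` and `make_file` are hypothetical names for illustration, not xarray's backend classes: deep-copying such a wrapper duplicates the reference to the file, while materializing the values first gives a copy that survives the file being deleted.

```python
import copy
import os
import tempfile


class LazyFileArray:
    """Hypothetical stand-in for a lazily loaded, file-backed array.

    It stores only the path; values are read from disk on every access.
    """

    def __init__(self, path):
        self.path = path

    def values(self):
        # Reading happens at access time, so the file must still exist.
        with open(self.path) as f:
            return [float(x) for x in f.read().split()]


def make_file(values):
    # Write whitespace-separated numbers to a temporary file and return its path.
    fd, path = tempfile.mkstemp(suffix=".txt")
    with os.fdopen(fd, "w") as f:
        f.write(" ".join(str(v) for v in values))
    return path


path = make_file([1.0, 2.0, 3.0])
lazy = LazyFileArray(path)

# Deep copy of the lazy wrapper: only the path string is duplicated, not the data
# (analogous to deep-copying the underlying lazy data structure).
wrapper_copy = copy.deepcopy(lazy)

# Eager copy: materialize the values in memory first
# (analogous to loading into NumPy before copying).
eager_copy = list(lazy.values())

os.remove(path)  # simulate deleting the downloaded file

print(eager_copy)  # → [1.0, 2.0, 3.0]
try:
    wrapper_copy.values()
except FileNotFoundError as err:
    # The deep-copied wrapper still points at the deleted file.
    print("FileNotFoundError:", err.strerror)
```

The eager copy keeps working after the file is gone, while reading through the deep-copied wrapper fails, which roughly mirrors the difference between the old and new deep-copy behavior discussed in this thread.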

Thanks for the quick reply and for that pointer to use …

Intended to fix pydata#4449. Needs tests!

What happened: I have a script that downloads a file, reads it, copies it into memory with `ds.copy(deep=True)`, and then removes the downloaded file from disk. In 0.16.1, I get a "No such file or directory" error when I try to read the data from the deep-copied Dataset, as if the Dataset had not actually been copied into memory.

What you expected to happen: In 0.16.0 and earlier, the variable data (`ds.varName.data`) is still available after the Dataset is copied into memory, even after the original file has been removed. This no longer works in 0.16.1.

Minimal Complete Verifiable Example:

Anything else we need to know?:

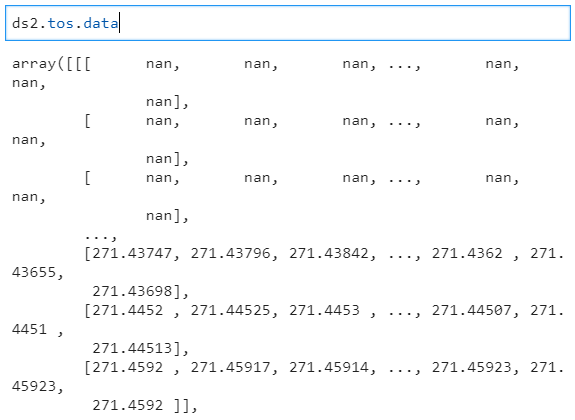

Output for xarray v0.16.0

Output for xarray v0.16.1

FileNotFoundError: [Errno 2] No such file or directory: ...tos_O1_2001-2002.nc'

Environment:

Output of xr.show_versions() for xarray 0.16.0

INSTALLED VERSIONS

commit: None
python: 3.8.5 | packaged by conda-forge | (default, Sep 16 2020, 17:19:16) [MSC v.1916 64 bit (AMD64)]
python-bits: 64
OS: Windows
OS-release: 10
machine: AMD64
processor: Intel64 Family 6 Model 142 Stepping 12, GenuineIntel
byteorder: little
LC_ALL: None
LANG: None
LOCALE: English_United States.1252
libhdf5: 1.10.6
libnetcdf: 4.7.4

xarray: 0.16.0
pandas: 1.1.2
numpy: 1.19.1
scipy: 1.5.0
netCDF4: 1.5.4
pydap: None
h5netcdf: None
h5py: 2.10.0
Nio: None
zarr: None
cftime: 1.2.1
nc_time_axis: None
PseudoNetCDF: None
rasterio: None
cfgrib: 0.9.8.4
iris: None
bottleneck: None
dask: None
distributed: None
matplotlib: 3.3.2
cartopy: 0.18.0
seaborn: None
numbagg: None
pint: 0.16
setuptools: 49.6.0.post20200917
pip: 20.2.3
conda: None
pytest: None
IPython: 7.18.1
sphinx: None

Output of xr.show_versions() for xarray 0.16.1

INSTALLED VERSIONS

commit: None
python: 3.8.5 | packaged by conda-forge | (default, Sep 16 2020, 17:19:16) [MSC v.1916 64 bit (AMD64)]
python-bits: 64
OS: Windows
OS-release: 10
machine: AMD64
processor: Intel64 Family 6 Model 142 Stepping 12, GenuineIntel
byteorder: little
LC_ALL: None
LANG: None
LOCALE: English_United States.1252
libhdf5: 1.10.6
libnetcdf: 4.7.4

xarray: 0.16.1
pandas: 1.1.2
numpy: 1.19.1
scipy: 1.5.0
netCDF4: 1.5.4
pydap: None
h5netcdf: None
h5py: 2.10.0
Nio: None
zarr: None
cftime: 1.2.1
nc_time_axis: None
PseudoNetCDF: None
rasterio: None
cfgrib: 0.9.8.4
iris: None
bottleneck: None
dask: None
distributed: None
matplotlib: 3.3.2
cartopy: 0.18.0
seaborn: None
numbagg: None
pint: 0.16
setuptools: 49.6.0.post20200917
pip: 20.2.3
conda: None
pytest: None
IPython: 7.18.1
sphinx: None

